There was a line in this blogpost about what it’s like to QA Kinect games that really caught my eye.

The cameras themselves are also fidgety little bastards. You need enough room for them to work, and if another person walks in front of it, the camera could stop tracking the player. We had to move to a large, specially-built office with lots of open space to accommodate for the cameras, and these days I find myself unconsciously walking behind rather than in front of people so as not to obstruct some invisible field of view.

(my emphasis).

It sounds strange when you first read it: behavioural change to accommodate the invisible gaze of the machines, just in case there’s an invisible depth-camera you’re obstructing. And at the same time: the literacy to understand that when a screen is in front of a person, there might also be an optical relationship connecting the two – and to break it would be rude.

The Sensor-Vernacular isn’t, I don’t think, just about the aesthetic of the “robot-readable world”; it’s also about the behaviours it inspires and leads to.

How does a robot-readable world change human behaviour?

It makes us dance around people, in case they’re engaged in a relationship with a depth-camera, for starters.

Look at all the other gestures and outward statements that the sensor-vernacular has already led to: numberplates in daft (and illegal) faces to confuse speed cameras; the growing understanding of RFID in the way we touch in and out of Oyster readers – wallets wafted above, handbags delicately dropped onto the reader; the politely averted gaze whilst we “check in” to the bar we’re in.

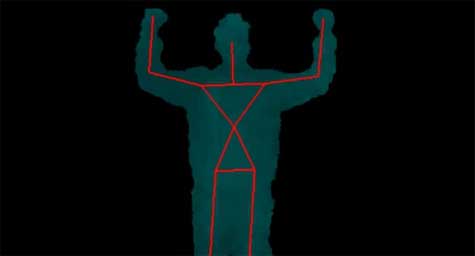

Where next for such behavioural shifts? How long before, rather than waving, or shaking hands, we greet each other with a calibration pose:

Which may sound absurd, but consider a business meeting of the future:

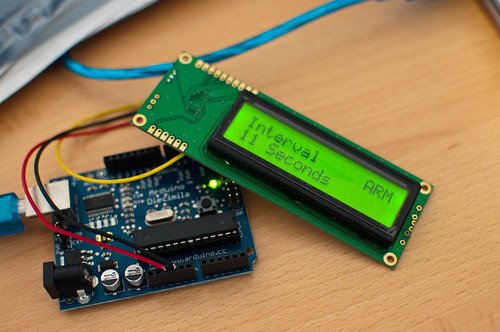

I go to your office to meet you. I enter the boardroom, greet you with the T-shaped pose: as well as saying hello to you, I’m saying hello to the various depth-cameras on the ceiling that’ll track me in 3D space. That lets me control my PowerPoint 2014 presentation on your computer/projector with motion and gesture controls. It probably also lets one of your corporate psychologists watch my body language as we discuss deals, watching for nerves, tension. It might also take a 3D recording of me to play back to colleagues unable to make the meeting. Your calibration pose isn’t strictly necessary for the machine – you’ve probably identified yourself to it before I arrive – so it just serves as formal politeness for me.

Why shouldn’t we wave at the machines? Some of the machines we’ll be waving at won’t really be machines – that telepresence robot may be mechanical, but it represents a colleague, a friend, a lover overseas. Of course you’d wave at it, smile at it, pat it as you leave the room.

If the robot-readable world becomes part of the vernacular, then it’s going to affect behaviours and norms, as well as the more visual components of aesthetics. That single line in the Kinect QA tester’s blogpost made me realise: it’s already arriving.