Time to write up something that’s been sitting around on various disks for a while.

Many months ago, I saw Plotagon. It’s best explained as Xtranormal by way of The Sims: reasonable resolution, 3D-animated videos based on scripts; a desktop tool to generate them, and a site to host them.

Most interestingly, it’s scripted with what actually looks like movie scripts, and that got me thinking: what would it look like to feed it with procedurally generated scripts? Could you make the machine make videos? All I knew was two things:

- I know, for good or ill, how Markov chains work.

- All the scripts for Friends are transcribed on the internet.

After all, given Plotagon’s focus on semi-realistic forms, I decided that it was best suited to the great American artform of the 20th century: the sitcom.

The Infinite Friends Machine was born.

The machines does a few simple things. First, it scrapes Friends transcripts. For now, it works for most of Series 1. It then parses those scripts and chops them up into episodes, scenes, and lines attributed to individual characters. It also strips out some directions. Then, using all that, it offers ways to generate new scripts.

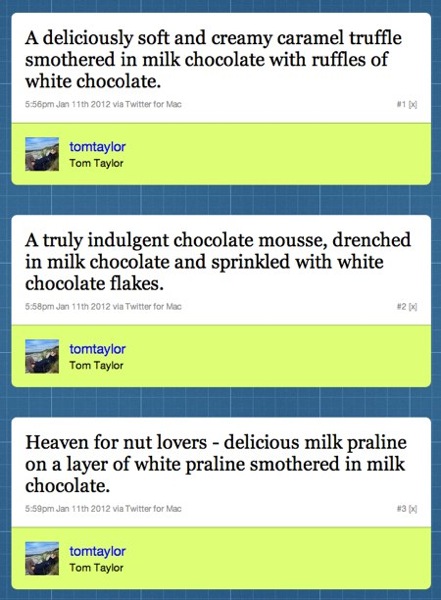

Markov Chains, as Leonard has frequently pointed out, are not always the best way of generating text alone, especially when the corpus you’re working from isn’t particularly consistent. He is, of course, right. Still, I enjoy the mental leap readers make in order to make generative prose actually make sense, and for this project, I mainly wanted to get to scripts as fast as I could.

Still, I didn’t want to hamper their relative crudeness, so I tried to skew things in their favour. To that end, the Infinite Friends Machine generates scripts by copying the structure of existing scripts. When it makes a new “episode”:

- it finds the scenes that are in the original episode it’s being copied from

- for each scene, it finds each line – who says a line at what point in the episode

- then, it generates a new line for the speaking character from their own corpus. That is: Joey only ever things derived from Everything Joey Has Ever Said. What this means is that the main cast have quite diverse things they might say, and the bit players pretty much only say the same thing. Gunther is quite boring.

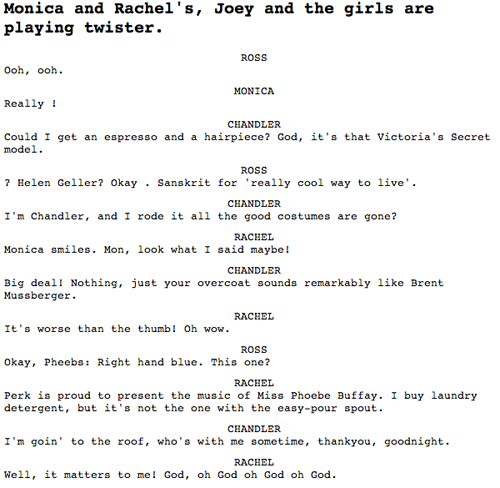

That’s it. A few seconds later, it spits out a nonsensical episodes of friends. Here’s a scene:

The machine isn’t online because it’s quite crude and processor-intensive, but you can get at the sourcecode from Github.

Anyhow: machine to generate scripts. Next stage: get them into Plotagon.

This was where my troubles began. For starters, despite having a nice format for scripts, Plotagon really demands you enter them via its UI – you can’t paste a big block of text in, you have to enter it by hand. Painful.

Next: Plotagon only lets scenes have two characters in. I decided to make a single scene – the tag on the end of the episode. But this turned into many scenes in Plotagon, as four people in an apartment was a bit much for it. I had to keep track of who was where, who was talking to whom at any point.

And then I had to deal with the unfortunate truth: Plotagon is horrible. I mean, Xtranormal used its non-realistic avatars and computer-voices to comic extent. By contrast, here we had disappointing voice acting with clunky visuals. Also, I had to add some ‘acting’. This largely consisted of making Chandler say everything whilst doing the (crazy) emote, to really capture that Series 1 Matthew Perry vibe.

A quick sting later, and Infinifriends S1E1 existed:

It is not exactly high art.

Just one scene took long enough, and I think, proved my point to an extent, but probably can’t be improved on for now. I’m not sure if I’ll ever return to the Infinite Friends Machine, but it was an entertaining enough exercise, and the video rendition is probably worth it for the cringe factor alone.

Theme tune. Credits. Tune in next time.